The rapid development of the Big Data Era is driving continuous innovation in the Internet, big data, cloud computing, artificial intelligence, blockchain and other technologies, and the volume of data stored by data-intensive industries such as finance, Internet services, telecom operators and government is growing explosively. An important direction for Big Data Warehouse applications is therefore helping enterprises manage massive volumes of business data and extract value from it, so that they can keep pace with rapid business growth.

A Big Data Warehouse usually adopts distributed computing technology: it stores massive data by relying on the natural scalability of big data platforms, and it converts SQL into tasks for a big data computing engine to perform data analysis. For example, Hadoop and Spark serve as the storage and computing engines, and tools or programming languages are used to design the processing logic that aggregates, cleans, computes on and analyzes data from different sources. Its main characteristics are massive data capacity, efficient data query and analysis, data security, and data flexibility.

In China, locally deployed data warehouses are still the first choice of the government, finance and energy sectors and of large enterprises. With their high throughput, low latency and other characteristics, SSDs have gradually become an important data storage medium in the Big Data Era and an important hardware foundation for today's locally deployed data warehouse products.

To better meet the demand for high-performance, high-reliability storage in data-intensive industries, Union Memory has joined hands with General Data Technology (hereinafter referred to as GBase), the domestic leader in the field of big data warehousing, to explore new storage solutions for the digital transformation of data-intensive industries in the Big Data Era.

GBase Massively Parallel Processing Database Cluster System (“GBase 8a MPP Cluster” for short) is a distributed parallel database cluster with a Shared Nothing architecture, developed on the basis of the GBase 8a series storage databases. It offers high performance, high availability and high scalability. It provides a cost-effective general computing platform for managing data of all sizes, and is widely used to support data warehouse systems, BI systems and decision support systems.

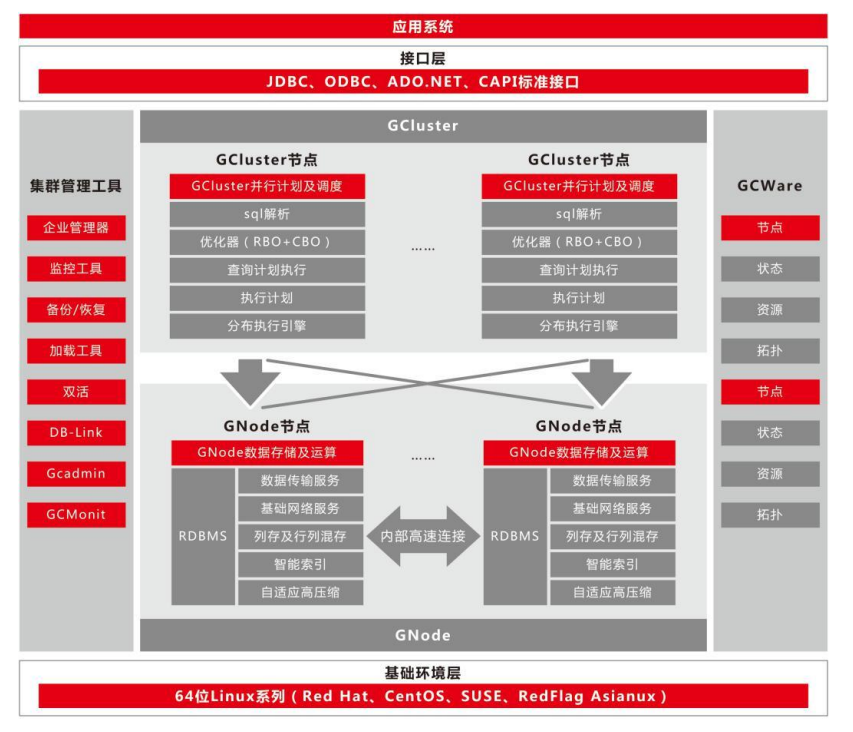

Fig. 1: Schematic Technical Architecture of GBase 8a MPP Cluster

GBase 8a MPP Cluster adopts a distributed federated architecture of MPP+Shared Nothing, in which nodes communicate over TCP/IP networks and each node stores its data on a local disk. Each node in the GBase 8a MPP Cluster System is relatively independent and self-sufficient, so the whole system is highly scalable: it can grow from a few nodes to hundreds of nodes to meet the requirements of a growing business.
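To make the Shared Nothing idea concrete, the following is a minimal sketch rather than GBase's actual implementation. It assumes rows are placed on nodes by hashing a distribution key (the `crc32` placement, the function names and the sample rows are all hypothetical), lets each node aggregate only its local rows, and then merges the partial results.

```python
from collections import defaultdict
from zlib import crc32


def node_for(key: str, num_nodes: int) -> int:
    """Pick the node that owns a key (illustrative hash placement, an assumption)."""
    return crc32(key.encode("utf-8")) % num_nodes


def distribute(rows, num_nodes):
    """Store every (key, value) row on exactly one node's local partition."""
    nodes = [[] for _ in range(num_nodes)]
    for key, value in rows:
        nodes[node_for(key, num_nodes)].append((key, value))
    return nodes


def local_sum(partition):
    """Partial aggregate computed by one node from its local rows only."""
    partial = defaultdict(int)
    for key, value in partition:
        partial[key] += value
    return dict(partial)


def gather(partials):
    """Merge the per-node partial aggregates into the final result."""
    merged = defaultdict(int)
    for partial in partials:
        for key, value in partial.items():
            merged[key] += value
    return dict(merged)


if __name__ == "__main__":
    # Hypothetical sample rows: (distribution key, measure).
    rows = [("cn", 5), ("us", 3), ("cn", 7), ("de", 1), ("us", 4)]
    nodes = distribute(rows, num_nodes=3)
    print(gather(local_sum(p) for p in nodes))
```

Because no node reads another node's disk, adding nodes adds both storage and compute, which is the scaling property the paragraph above describes.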

1 Verification Environment

1.1 Hardware Configuration for the Verification

| Type | Model | Hardware Configuration | Remark |
| --- | --- | --- | --- |
| Server | 2U, 2-socket (x86) | CPU: 2 × Intel® Xeon® Gold 6330 CPU @ 2.00GHz | 3 sets |
| | | Memory: 8 × 32GB | |
| | | Storage controller: supports RAID 5 (data disks) | |
| | | Network card: 1 × 2-port 10GE Ethernet card | |
| Hard Disk | Union Memory SSD | System disk: 2 × 480GB SATA SSD; data disk: 6 × 3.84TB SAS SSD (UM511a) | |
| Switch | 10GE switch | 48-port 10GE switch | / |

1.2 Software Configuration for the Verification

| Type | Model | Version | Remark |
| --- | --- | --- | --- |
| OS | redhat (x86) | 7.9 | / |
| GBase Version | GBase 8a | GBase8a_MPP_Cluster-License-9.5.3.14 | / |
| Client | gccli | 9.5.3.14 | / |
| Database Pressure Testing | TPC-DS | 3.2.0rc1 | Open Source |
| Database Pressure Testing | TPC-H | 3.0.0 | Open Source |
| Network Monitoring | SAR | 10.1.5 | OS Built-in |
| Disk-side IO Statistics | IOSTAT | 10.0.0 | / |
| CPU Utilization | MPSTAT | 10.1.5 | / |

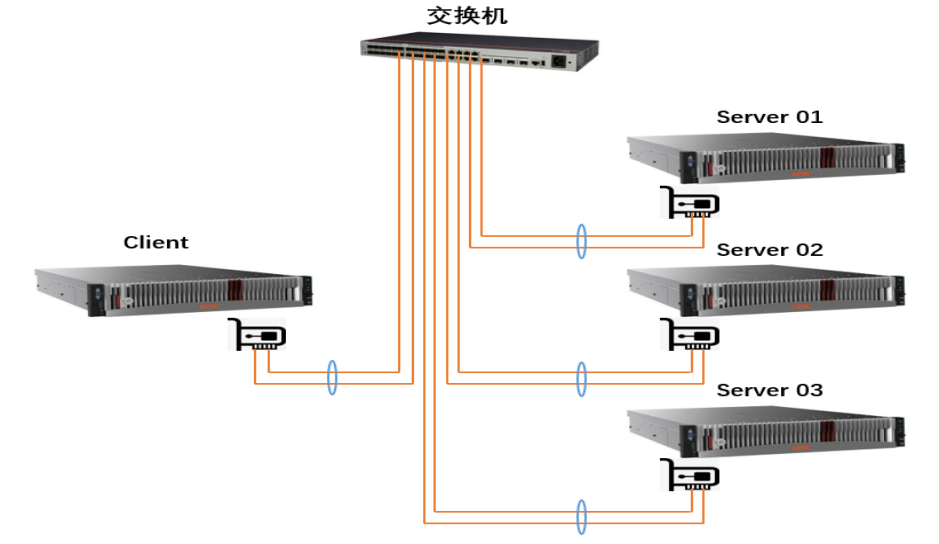

1.3 Networking Planning for the Verification

Fig. 2: GBase 8a MPP Networking Planning Architecture

2 Verification Method

Step 1: Configure the 6 UM511a SAS SSDs as a RAID 5 group.
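As a quick sanity check on Step 1, the sketch below computes the usable capacity of a RAID 5 group, which keeps one disk's worth of parity per group. The function names are illustrative. For the 6 × 3.84TB data disks listed in the hardware table this gives (6 - 1) × 3.84 = 19.2 TB per server, before any file system or controller overhead.

```python
def raid5_usable_tb(num_disks: int, disk_tb: float) -> float:
    """Usable capacity of one RAID 5 group: one disk's worth of space holds parity."""
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (num_disks - 1) * disk_tb


def raid5_parity_fraction(num_disks: int) -> float:
    """Share of raw capacity spent on parity in one RAID 5 group."""
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return 1.0 / num_disks


if __name__ == "__main__":
    # Data disks from the hardware table: 6 x 3.84TB SAS SSD per server.
    print(f"usable: {raid5_usable_tb(6, 3.84):.2f} TB")
    print(f"parity share: {raid5_parity_fraction(6):.1%}")
```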

Step 2: Create Database and Sharded Tables

To evaluate the database's analysis and processing capabilities with the TPC-H tool, create the database and the 8 built-in TPC-H tables (as sharded tables) in advance;

To evaluate the database's analysis and processing capabilities with the TPC-DS tool, create the database and the 25 built-in TPC-DS tables (as sharded tables) in advance.

Step 3: Parameter Tuning

Tune relevant parameters as per the suggestions of GBase.

Step 4: Data Generation

TPC-H generates the required test data with the dbgen tool, controlled by command-line parameters. The command used is ./dbgen -C 10 -S 1 -s 3000 -vf;

TPC-DS generates the required test data with the dsdgen tool in the same way. The command used is dsdgen -scale 3000 -dir testdata -force -parallel 10 -child 1.
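The two commands above each produce only the first of 10 data chunks (`-S 1` of `-C 10` for dbgen, `-child 1` of `-parallel 10` for dsdgen). A minimal sketch of how the full set of chunk commands could be enumerated is shown below. It assumes the remaining chunks are produced by repeating the same command with the step or child number running from 2 to 10, and that `./dbgen` sits in the working directory. The helper names are illustrative, and the commands are only printed, not executed.

```python
import shlex


def dbgen_cmd(scale: int, chunks: int, step: int) -> list:
    """TPC-H dbgen command for one chunk (flags as quoted in the text)."""
    return ["./dbgen", "-C", str(chunks), "-S", str(step), "-s", str(scale), "-vf"]


def dsdgen_cmd(scale: int, parallel: int, child: int, out_dir: str = "testdata") -> list:
    """TPC-DS dsdgen command for one chunk (flags as quoted in the text)."""
    return [
        "dsdgen", "-scale", str(scale), "-dir", out_dir, "-force",
        "-parallel", str(parallel), "-child", str(child),
    ]


def all_chunk_commands(scale: int = 3000, chunks: int = 10):
    """One dbgen and one dsdgen command per chunk, numbered 1..chunks."""
    tpch = [dbgen_cmd(scale, chunks, step) for step in range(1, chunks + 1)]
    tpcds = [dsdgen_cmd(scale, chunks, child) for child in range(1, chunks + 1)]
    return tpch, tpcds


if __name__ == "__main__":
    tpch, tpcds = all_chunk_commands()
    for cmd in tpch + tpcds:
        print(shlex.join(cmd))
```

Each printed line can be run in its own shell or passed to `subprocess.run`, so the chunks can be generated in parallel on a machine with enough cores and disk bandwidth.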

Step 5: Data Loading

For data import, an FTP server is set up on a local node of the GBase cluster and data is loaded over FTP. When a table has multiple data files, all of its files are combined into a single load statement, and the test data is loaded table by table into the 8 sharded tables.
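The idea of folding several data files of one table into a single load statement can be sketched as follows. The statement shape is an assumption modeled on MySQL-style `LOAD DATA INFILE` with a comma-separated list of FTP URLs; the exact GBase 8a syntax and options should be taken from the GBase documentation. The FTP credentials, host, directory and file names are hypothetical placeholders.

```python
def build_load_statement(table: str, file_names: list, ftp_base: str,
                         delimiter: str = "|") -> str:
    """Combine all data files of one table into a single load statement.

    NOTE: the SQL text is an assumed, MySQL-style shape for illustration only.
    """
    if not file_names:
        raise ValueError("at least one data file is required")
    base = ftp_base.rstrip("/")
    urls = ",".join(f"{base}/{name}" for name in file_names)
    return (
        f"LOAD DATA INFILE '{urls}' INTO TABLE {table} "
        f"FIELDS TERMINATED BY '{delimiter}';"
    )


if __name__ == "__main__":
    # Hypothetical chunk files for one table, served by a hypothetical FTP server.
    files = [f"lineitem.tbl.{i}" for i in range(1, 4)]
    print(build_load_statement("tpch.lineitem", files,
                               "ftp://user:pass@192.0.2.10/tpch"))
```

Issuing one statement per table keeps the load at the granularity the text describes: each table is loaded once, regardless of how many chunk files were generated for it.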

Step 6: SQL Execution

An independent client communicates with the GBase cluster over the service-plane network and uses the gccli tool to execute the 22 TPC-H SQL use cases. A reference command is as follows:

/home/GBase/gccli_install/gcluster/server/bin/gccli -h 10.28.100.38 -uroot -Dtpch -vvv < query_1.sql
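A minimal sketch of how the 22 use cases could be run back to back and timed is given below. It reuses the gccli path, host, user and database from the reference command. It assumes the query files are named `query_1.sql` through `query_22.sql`, which is a guess extrapolated from the single example, and the helper names are illustrative.

```python
import subprocess
import time
from pathlib import Path

GCCLI = "/home/GBase/gccli_install/gcluster/server/bin/gccli"


def gccli_args(host: str = "10.28.100.38", user: str = "root",
               database: str = "tpch") -> list:
    """Argument list equivalent to the reference command, without the redirect."""
    return [GCCLI, "-h", host, f"-u{user}", f"-D{database}", "-vvv"]


def query_files(directory: str = ".", count: int = 22) -> list:
    """Assumed file naming: query_1.sql .. query_<count>.sql."""
    return [Path(directory) / f"query_{i}.sql" for i in range(1, count + 1)]


def run_query(sql_file: Path, args: list) -> float:
    """Feed one SQL file to gccli on stdin and return the wall-clock seconds."""
    start = time.perf_counter()
    with open(sql_file, "rb") as sql:
        subprocess.run(args, stdin=sql, check=True)
    return time.perf_counter() - start


def main() -> None:
    """Run every query in order and print per-query and total elapsed time."""
    args = gccli_args()
    total = 0.0
    for sql_file in query_files():
        elapsed = run_query(sql_file, args)
        total += elapsed
        print(f"{sql_file.name}: {elapsed:.2f} s")
    print(f"total: {total:.2f} s")
```

Calling `main()` on the client host runs the queries sequentially; the timings are client-side wall-clock values and include network round trips.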

3 Verification Results

The verification results in the GBase 8a MPP scenario are as follows:

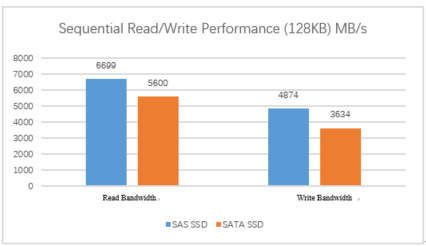

3.1 Performance of Union Memory SAS SSD under Raid Logical Volume Bandwidth Performance Testing

Fig. 3: Performance of Union Memory SAS SSD under Raid Logical Volume Bandwidth Performance Testing

In this test, 6 SAS SSDs and 12 SATA SSDs are configured as RAID 5 and RAID 50 volumes, respectively. The FIO test tool is used on the server host to stress-test 128KB sequential read/write bandwidth. As shown in Fig. 3, the SAS SSD volume delivers higher read and write bandwidth: read bandwidth is about 19.6% higher and write bandwidth about 34% higher than with SATA, a clear advantage for SAS SSDs in RAID logical volume bandwidth.
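The text does not list the exact FIO job parameters, so the following is only a sketch of what a 128KB sequential bandwidth job might look like. The block size and access pattern come from the text. The target device `/dev/sdX`, the `libaio` engine, the queue depth, the job count and the runtime are assumptions chosen for illustration. The command is only built and printed here; note that a write job against a raw device destroys the data on it.

```python
import shlex


def fio_seq_cmd(target: str, mode: str, runtime_s: int = 60,
                iodepth: int = 32, numjobs: int = 1) -> list:
    """Build a fio command line for a 128KB sequential read or write job."""
    if mode not in ("read", "write"):
        raise ValueError("mode must be 'read' or 'write'")
    return [
        "fio",
        f"--name=seq-{mode}-128k",
        f"--filename={target}",    # RAID logical volume under test (placeholder)
        f"--rw={mode}",            # sequential read or sequential write
        "--bs=128k",               # block size stated in the text
        "--ioengine=libaio",       # assumed I/O engine
        "--direct=1",              # bypass the page cache
        f"--iodepth={iodepth}",    # assumed queue depth
        f"--numjobs={numjobs}",    # assumed number of workers
        f"--runtime={runtime_s}",  # assumed duration in seconds
        "--time_based",
        "--group_reporting",
    ]


if __name__ == "__main__":
    for mode in ("read", "write"):
        print(shlex.join(fio_seq_cmd("/dev/sdX", mode)))
```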

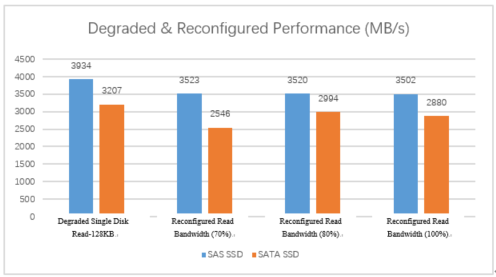

3.2 Performance of Union Memory SAS SSD under Degraded Single Disk at Fault & Reconfigured Write Performance

Fig. 4: Performance of Union Memory SAS SSD under Degraded Single Disk at Fault & Reconfigured Write Performance

The read performance of a degraded single disk at fault is the read performance that the logical volume of the RAID group delivers to services when a single disk has failed or been pulled out. The reconfigured performance is the service-side performance while the RAID group, after a single disk fails, reconfigures (rebuilds) the data onto the hot spare disk and serves I/O at the same time. This test is performed with 6 Union Memory SAS SSDs configured as RAID 5 and one SSD failed.

As shown in Fig. 4, SAS SSDs perform better than SATA SSDs both in the read performance of a degraded single disk at fault and in the reconfigured read bandwidth. The degraded read performance is about 22.7% better than that of SATA SSDs, and the reconfigured read bandwidth is about 38.4% higher than that of SATA SSDs.
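Degraded reads are more expensive than normal reads because a RAID 5 group has to recompute the failed disk's blocks from the surviving disks. The sketch below illustrates the standard XOR parity principle on a single stripe of 5 data blocks plus 1 parity block, matching the 6-disk group in the test. It is a simplification: real controllers rotate parity across disks and work on much larger strips, and the function names are illustrative.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks followed by their XOR parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def degraded_read(stripe, failed_index):
    """Rebuild the block of the failed disk from all surviving blocks."""
    survivors = [block for i, block in enumerate(stripe) if i != failed_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    # 5 data blocks + 1 parity block, as in a 6-disk RAID 5 group.
    data = [bytes([value] * 8) for value in (0x11, 0x22, 0x33, 0x44, 0x55)]
    stripe = make_stripe(data)
    rebuilt = degraded_read(stripe, failed_index=2)
    print(rebuilt == stripe[2])
```

Every read that lands on the failed disk touches all 5 surviving disks, and a rebuild does the same for every stripe while services keep running, which is why disk bandwidth matters in both measurements.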

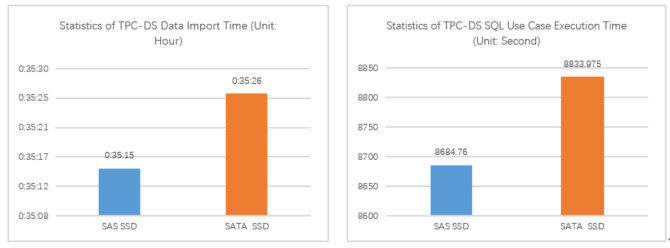

3.3 Performance of Union Memory SAS SSD under TPC-DS Scenario

Fig. 5: Performance of Union Memory SAS SSD under TPC-DS Scenario

In the TPC-DS test, data is loaded into the GBase sharded tables over FTP. As Fig. 5 shows, in the same physical hardware environment, Union Memory SAS SSDs take slightly less time than SATA SSDs in both data import and SQL use case execution.

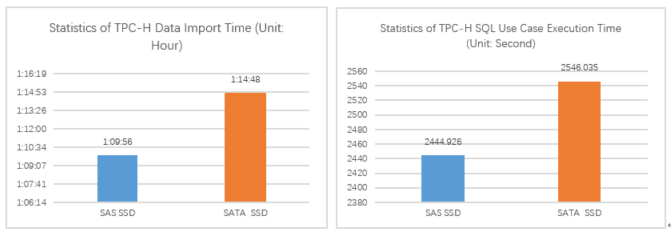

3.4 Performance of Union Memory SAS SSD under TCP-H Scenario

Fig. 6: Performance of Union Memory SAS SSD under TCP-H Scenario

In the TPC-H test, data is loaded into the GBase sharded tables over FTP. Fig. 6 shows the performance of Union Memory SAS SSDs and SATA SSDs in the TPC-H test. The total time with Union Memory SAS SSDs is slightly less than with SATA SSDs: the data import time is about 6% shorter, and the SQL use case execution time is about 3% shorter.

The verification demonstrates the performance advantages of Union Memory SAS SSDs in GBase 8a MPP scenarios. With higher per-disk bandwidth and better degraded and reconfiguration performance when a disk is at fault, Union Memory SAS SSDs can effectively support efficient services. They not only help enterprises save hardware procurement costs, but also address the problem of storage and computing over massive data, and enable efficient processing of massive structured data.

Union Memory has been deeply engaged in the field of solid-state drives for individual and enterprise users for many years, and has released a variety of high-performance, high-reliability products that can cope with complex service environments and database challenges and meet the requirements of industry users for processing massive data. Union Memory will continue to work with GBase to create new storage solutions for the era of digital transformation.