In recent years, with the rapid development and adoption of new technologies such as cloud computing, 5G, artificial intelligence (AI), and the Internet of Things (IoT), data volumes have grown exponentially. Traditional centralized storage cannot meet the needs of large-scale storage applications because of its limitations in reliability, security, and data utilization. Against this backdrop, distributed storage has emerged. With advantages such as easy scalability, high performance, cost-effectiveness, support for tiered storage, multi-replica consistency, and standardization, it has become an important choice for building storage infrastructure in cloud environments.

Distributed storage can be divided into block storage, file storage, and object storage. Block storage mainly provides elastic volumes for the virtual machines and containers in compute clusters. As the most critical storage service on today's cloud platforms, Elastic Volume Service (EVS) places extremely high demands on storage performance, so major cloud vendors generally deploy it on all-flash storage. Solid State Drives (SSDs) use flash memory as the storage medium and, compared with Hard Disk Drives (HDDs), offer faster reads and writes, lower latency, and better vibration resistance. SSDs are therefore widely used in distributed storage.

Ceph is currently the most widely used open-source distributed storage software in the industry, featuring high scalability, high performance, and high reliability. It supports block, file, and object storage interfaces and has been widely deployed in cloud computing IaaS platforms. To help improve the performance and reliability of distributed block storage systems, Union Memory carried out field tests on the Ceph platform.

Ceph is a unified distributed storage system that provides excellent performance, reliability, and scalability. As an important underlying storage system for cloud computing, it has been widely adopted by domestic operators and government agencies and applied in industries such as finance and the Internet.

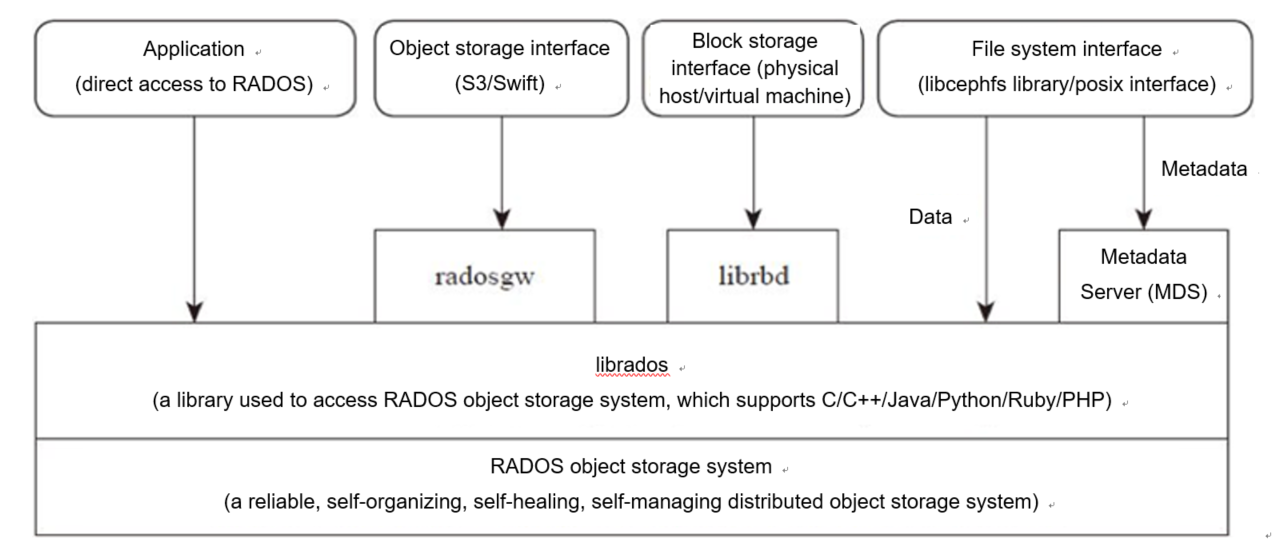

Figure 1: Technical architecture of Ceph

Ceph is divided mainly into the application interface layer, the basic storage interface layer, and the storage object layer. The interface layer handles client access and provides native language binding interfaces, a block storage device interface, and a file system interface, reflecting Ceph's unified design.

In the Ceph block storage system, data is stored in volumes as blocks. Volumes provide applications with large storage capacity, high reliability, and high performance. A volume can be mapped into the operating system as a block device and managed by a file system layer on top of it. Ceph's block storage is exposed through RBD (RADOS Block Device), which gives clients highly reliable, high-performance, distributed block storage. RBD also supports enterprise-grade features such as full and incremental snapshots, thin provisioning, copy-on-write cloning, and in-memory caching, the last of which further improves performance.

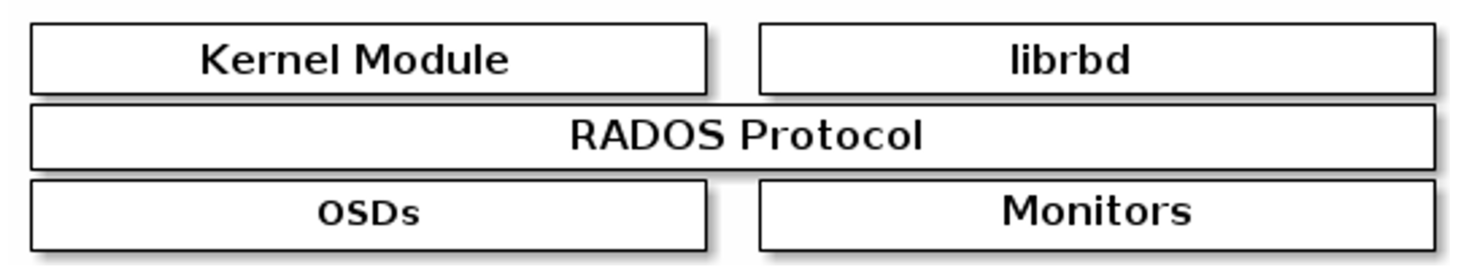

Figure 2: Block storage components of Ceph

| Type | Model | Hardware configuration |
| --- | --- | --- |
| Server | 2U two-socket server | CPU: Intel Xeon Gold 6336Y @ 2.4 GHz |
| | | Memory: 12 × 16 GB |
| | | Storage controller: supports RAID 1 (system disk) |
| | | Network adapter: 2 × dual-port 25GE Ethernet cards |
| Hard disk | Union Memory SSD | System disk: 2 × 480 GB SATA |
| | | Data disk: 8 × 7.68 TB NVMe SSD (UH811a) |
| Switch | 25GE switch | 48-port 25GE switch |

| Type | Model / purpose | Version |
| --- | --- | --- |
| Operating system | CentOS (x86) | 7.6 |
| Storage software | Ceph (open source) | 12.2.8 Luminous |
| FIO | I/O testing | 3.7 |
| SAR | Network monitoring | 10.1.5 |
| IOSTAT | Disk-side I/O statistics | 10.0.0 |
| MPSTAT | CPU utilization | 10.1.5 |

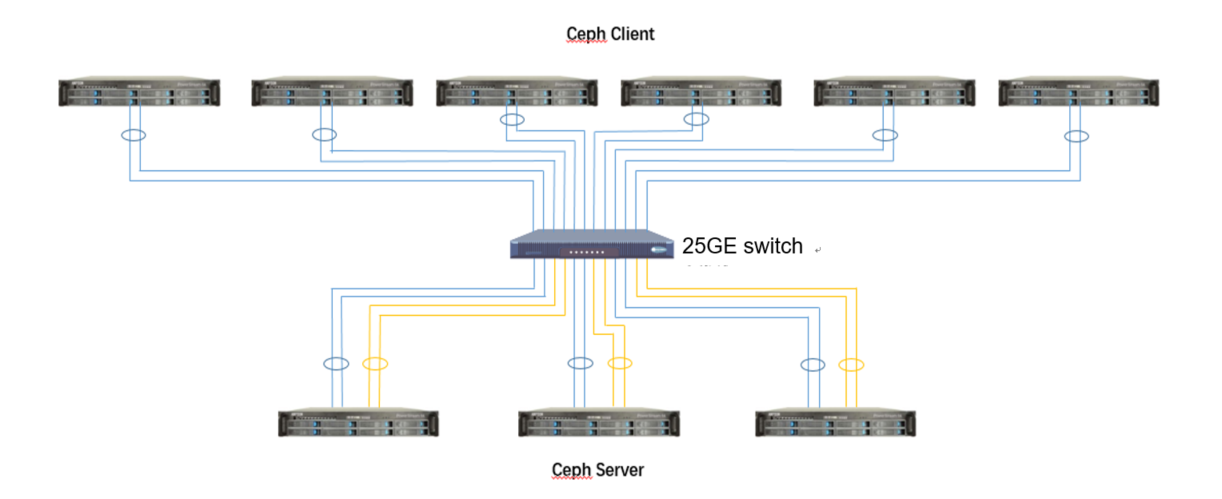

Figure 3: Networking plan architecture of Ceph

- Step 1: Create the RBD pool and RBD volumes. The configuration parameter `osd_pool_default_size` sets the default number of replicas for new pools, including the RBD pool: "2" means two replicas and "3" means three replicas. Add this setting to `ceph.conf` according to the required replica count. As planned, create 60 RBD volumes of 100 GiB each for I/O testing.

- Step 2: Before starting the I/O tests, start monitoring I/O, CPU, and network on both the servers and the clients, sampling every 2 seconds.

- Step 3: From 6 clients, issue I/O to all 60 RBD volumes simultaneously. Pin the fio processes on each client to CPU cores, so that each fio process runs on a different core.

- Step 4: After the I/O tests complete, stop the I/O, CPU, and network monitoring on the servers and clients.

- Step 5: Once testing is finished, aggregate the bandwidth (BW), IOPS, and average latency reported by all fio instances on all clients, together with the corresponding monitoring data. BW and IOPS are simply summed across fio instances; the overall average latency is the sum of the individual average latencies divided by the number of fio instances.
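Steps 1 to 4 can be sketched as a small Python script that generates the volume, monitoring, and fio commands. This is a sketch under stated assumptions, not the script Union Memory used: the pool name `rbdbench`, image names `vol00` to `vol59`, the CephX user `admin`, the 4K random-read settings, round-robin volume placement, and pinning to cores 0 to 9 on each client are all hypothetical. It relies only on standard options: `rbd create --size` (in MiB by default), fio's `rbd` engine options (`--pool`, `--rbdname`, `--clientname`) and `--cpus_allowed`, and the interval argument of `sar`, `iostat`, and `mpstat`.

```python
"""Sketch of the test layout in Steps 1-4: 60 RBD volumes of 100 GiB spread
over 6 clients, one fio process per volume pinned to its own CPU core, and
monitoring sampled every 2 seconds.

Hypothetical choices (not from the original report): pool name "rbdbench",
image names "vol00".."vol59", CephX user "admin", the fio workload settings,
and pinning to cores 0..9 on each client.
"""

NUM_VOLUMES = 60
NUM_CLIENTS = 6
VOLUME_SIZE_MIB = 100 * 1024  # 100 GiB; rbd --size defaults to MiB
POOL = "rbdbench"             # hypothetical pool name
MONITOR_INTERVAL_S = 2        # Step 2: sample every 2 seconds


def volume_names(n: int = NUM_VOLUMES) -> list[str]:
    """Zero-padded names so lexical order matches numeric order."""
    return [f"vol{i:02d}" for i in range(n)]


def create_commands(pool: str = POOL) -> list[str]:
    """Step 1: rbd CLI commands that create the test volumes."""
    return [f"rbd create --size {VOLUME_SIZE_MIB} {pool}/{name}"
            for name in volume_names()]


def monitor_commands(interval: int = MONITOR_INTERVAL_S) -> list[str]:
    """Step 2: network, disk, and CPU monitors (stopped again in Step 4)."""
    return [
        f"sar -n DEV {interval}",     # per-interface network throughput
        f"iostat -x -m {interval}",   # extended per-disk I/O statistics
        f"mpstat -P ALL {interval}",  # per-core CPU utilization
    ]


def assign_volumes(num_clients: int = NUM_CLIENTS) -> dict[int, list[str]]:
    """Step 3: round-robin the volumes over the clients (10 per client)."""
    plan: dict[int, list[str]] = {c: [] for c in range(num_clients)}
    for i, name in enumerate(volume_names()):
        plan[i % num_clients].append(name)
    return plan


def fio_commands(volumes: list[str], pool: str = POOL, rw: str = "randread",
                 bs: str = "4k", iodepth: int = 32) -> list[str]:
    """Step 3: one fio process per volume, each pinned to a different core."""
    cmds = []
    for core, name in enumerate(volumes):
        cmds.append(
            f"fio --name={name} --ioengine=rbd --clientname=admin "
            f"--pool={pool} --rbdname={name} --rw={rw} --bs={bs} "
            f"--iodepth={iodepth} --cpus_allowed={core} "
            f"--output-format=json --output={name}.json"
        )
    return cmds


if __name__ == "__main__":
    for client, vols in assign_volumes().items():
        print(f"# client {client}")
        print("\n".join(fio_commands(vols)))
```

Running the script prints each client's ten fio command lines. Pool creation and the `osd_pool_default_size` setting are left out because their exact values (such as the PG count) are not given in the test plan.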
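The aggregation rule in Step 5 can be expressed as a short function. The `FioResult` record and its units are hypothetical stand-ins for whatever each fio instance reports, and the input numbers below are illustrative, not measured data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class FioResult:
    """Summary of one fio instance (illustrative field names and units)."""
    bw_mib_s: float    # bandwidth in MiB/s
    iops: float        # I/O operations per second
    avg_lat_us: float  # average latency in microseconds


def aggregate(results: list[FioResult]) -> dict[str, float]:
    """Combine per-instance results the way Step 5 describes:
    BW and IOPS are summed; average latency is the plain mean of the
    per-instance averages."""
    if not results:
        raise ValueError("no fio results to aggregate")
    return {
        "bw_mib_s": sum(r.bw_mib_s for r in results),
        "iops": sum(r.iops for r in results),
        "avg_lat_us": mean(r.avg_lat_us for r in results),
    }


# Hypothetical numbers for two 4K fio instances, not measured data.
summary = aggregate([FioResult(100.0, 25600.0, 400.0),
                     FioResult(50.0, 12800.0, 600.0)])
print(summary)  # {'bw_mib_s': 150.0, 'iops': 38400.0, 'avg_lat_us': 500.0}
```

The plain mean weights every fio instance equally. When instances deliver very different IOPS, an IOPS-weighted mean (the sum of IOPS × latency divided by total IOPS) tracks per-I/O latency more closely.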

The verification results in the Ceph scenario are as follows:

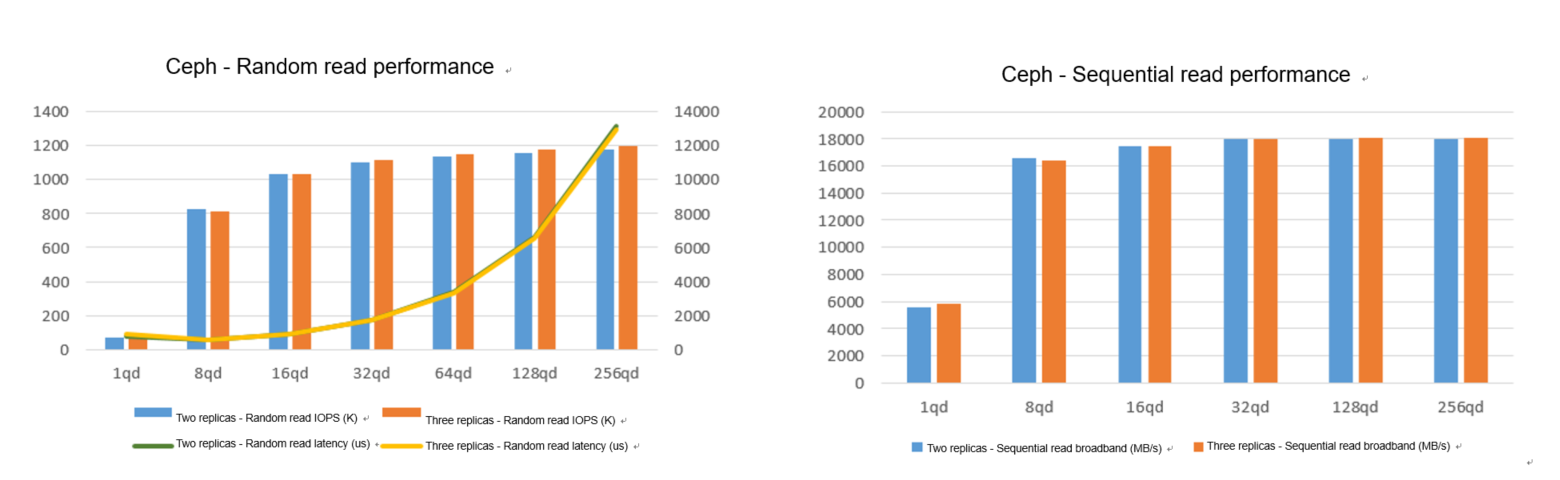

Figure 4: Read performance of Union Memory's UH8 Series SSDs in Ceph scenario

As can be seen from Figure 4, the read performance of Union Memory's UH8 Series SSDs in the Ceph distributed storage system is essentially the same in the two-replica and three-replica configurations. This is expected, because Ceph serves each read from the primary OSD only, so additional replicas add no read work. Latency follows the same trend in both configurations: it remains relatively stable from 1QD to 32QD and increases significantly beyond 32QD.
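The latency knee can be explained with Little's law: the average number of outstanding I/Os equals IOPS multiplied by average latency. While the system still has spare throughput, raising the queue depth raises IOPS and latency stays flat; once IOPS reaches its ceiling, every extra queue slot only adds waiting time, so latency grows linearly with queue depth. The toy model below illustrates this; the base latency and IOPS ceiling are hypothetical values chosen to put the knee at 32QD, not figures fitted to Figure 4.

```python
def modeled_latency_us(queue_depth: int, jobs: int,
                       base_latency_us: float, max_iops: float) -> float:
    """Average latency from Little's law (outstanding I/Os = IOPS x latency)
    for a toy system that completes each I/O in base_latency_us until it
    hits a throughput ceiling of max_iops."""
    outstanding = queue_depth * jobs                   # I/Os in flight
    demand_iops = outstanding * 1e6 / base_latency_us  # IOPS if never saturated
    iops = min(demand_iops, max_iops)                  # capped by the system
    return outstanding * 1e6 / iops                    # W = L / lambda


# Hypothetical parameters: 60 fio jobs, 2 ms base latency, 960k IOPS ceiling.
for qd in (1, 8, 16, 32, 64, 128):
    print(qd, modeled_latency_us(qd, jobs=60, base_latency_us=2000.0,
                                 max_iops=960_000.0))
```

Real clusters saturate gradually rather than at a sharp corner, but the shape (flat, then rising) matches the trend described above.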

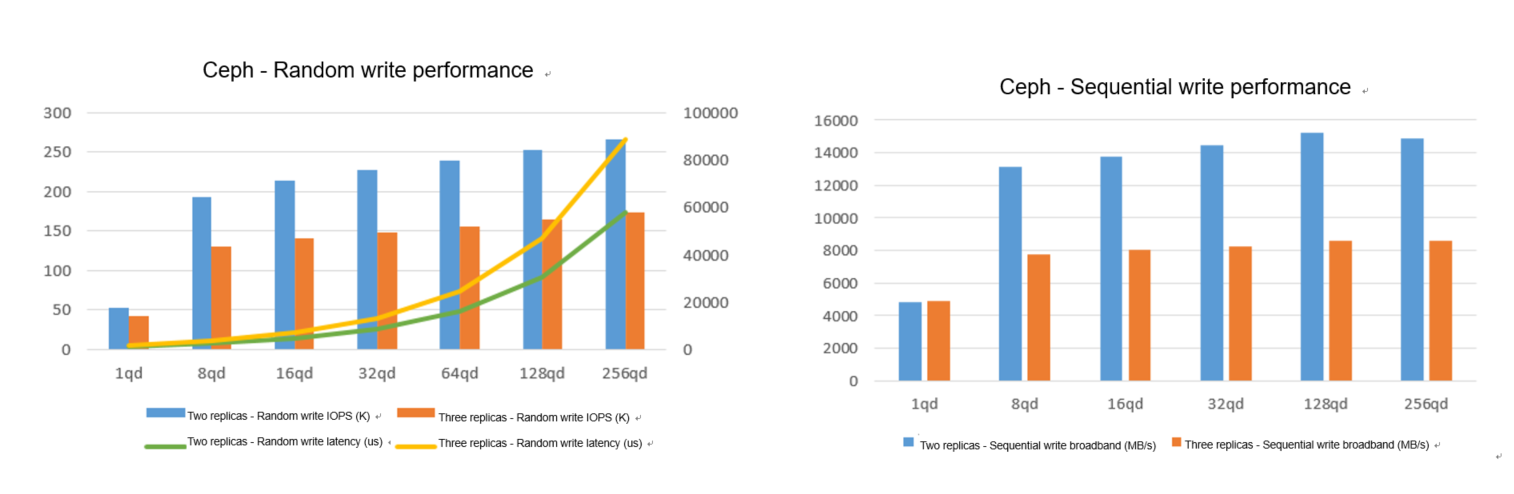

Figure 5: Write performance of Union Memory's UH8 Series SSDs in Ceph scenario

As shown in Figure 5, write performance with two replicas is significantly higher than with three replicas in both random and sequential write scenarios. This is mainly due to the extra network and storage load of replication: every client write must be stored on each replica, so the three-replica configuration shows a noticeable drop in both write bandwidth and write IOPS. Overall latency is also clearly lower with two replicas. The latency trend is similar for both configurations, with a significant increase beyond 32QD.
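A simple cost model shows why the replica count matters for writes. In Ceph's primary-copy replication, each client write lands on the primary OSD, which forwards it to the other replicas and acknowledges the client only after all replicas have stored it. If the disks were the only bottleneck, the client write ceiling would be the aggregate backend write bandwidth divided by the replica count. The backend figure below is hypothetical, and the model ignores metadata, write-ahead logging, CPU, and network limits, so the measured gap in Figure 5 need not equal the idealized ratio.

```python
def replication_costs(client_write_mib: float, replicas: int) -> dict[str, float]:
    """Idealized per-write cost of primary-copy replication: every client
    byte is stored on `replicas` OSDs, and the primary OSD forwards
    (replicas - 1) copies over the cluster network."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return {
        "backend_write_mib": client_write_mib * replicas,
        "replication_network_mib": client_write_mib * (replicas - 1),
    }


def client_write_ceiling(backend_write_mib_s: float, replicas: int) -> float:
    """Upper bound on client write bandwidth if the backend disks are the
    only bottleneck."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return backend_write_mib_s / replicas


# Hypothetical aggregate backend write bandwidth, not a measured value.
backend = 12_000.0
two = client_write_ceiling(backend, 2)    # 6000.0
three = client_write_ceiling(backend, 3)  # 4000.0
print(two, three, two / three)            # 6000.0 4000.0 1.5
```

Under these assumptions, two replicas allow at most 1.5 times the client write bandwidth of three replicas, and they halve the replication traffic on the cluster network (one forwarded copy instead of two).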

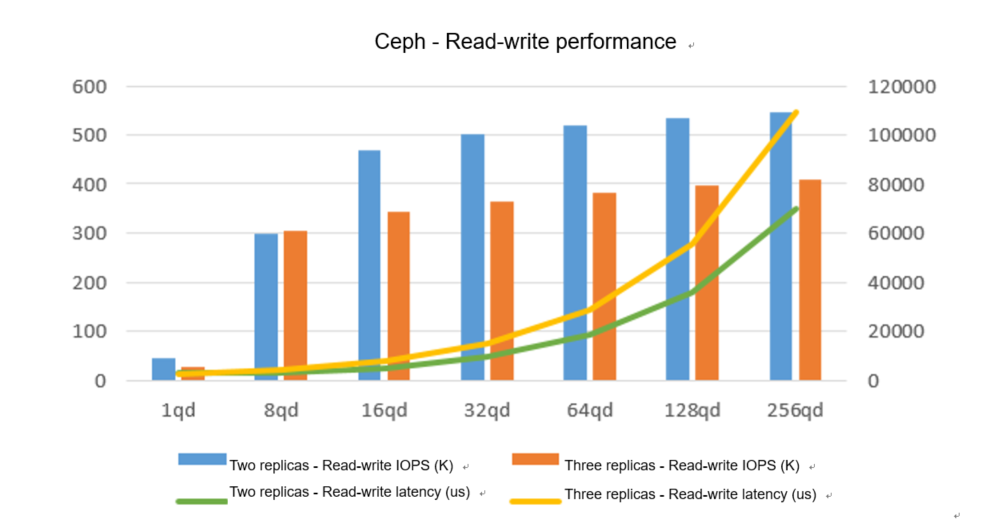

As can be seen from Figure 6, in the 4K 7:3 mixed read/write scenario (70% reads, 30% writes), the two-replica configuration delivers higher IOPS than the three-replica configuration from 16QD onwards. Overall latency is lower with two replicas, but the latency trend is essentially the same for both configurations, rising significantly from 32QD.

Conclusion:

The measured read, write, and mixed read/write data from the Ceph environment show that Union Memory's SSDs deliver excellent overall performance and can provide high storage performance for Ceph. Latency is particularly good at 32QD and below, so workloads that keep the queue depth within this range get the best latency.

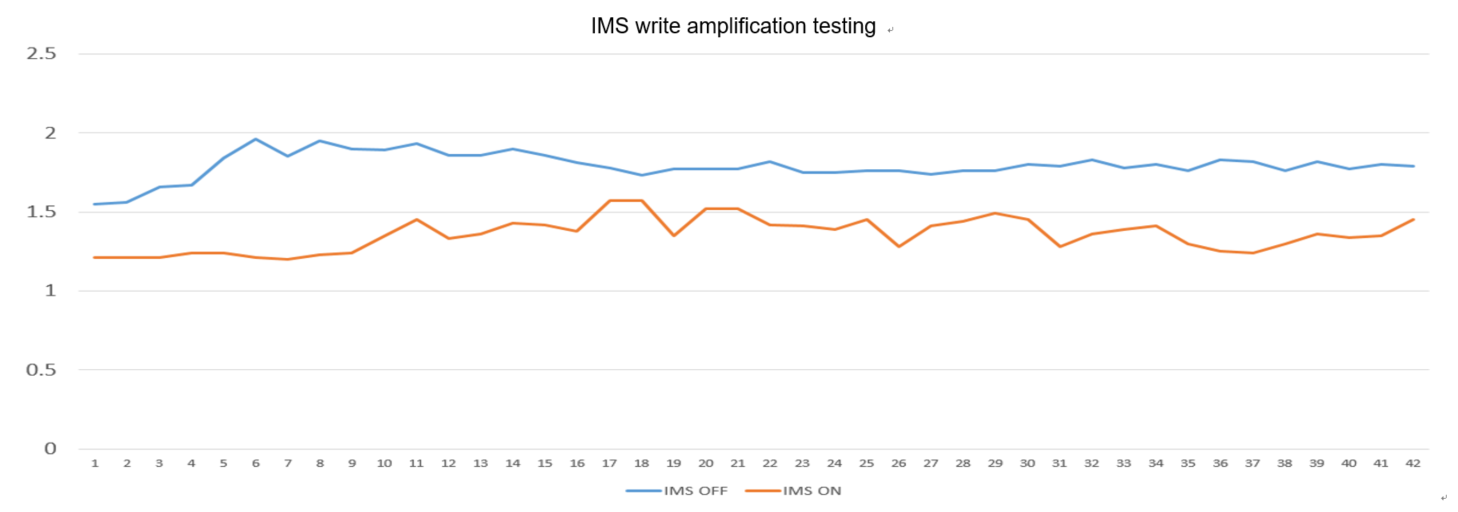

To better address the performance degradation and reduced endurance of SSDs caused by write amplification due to read and write mechanisms, Union Memory has introduced an intelligent multi-stream feature for SSDs. By utilizing an intelligent hot-cold data classification algorithm, it effectively improves the efficiency of garbage collection (GC), reduces write amplification, and enhances SSD performance.

Figure 7: Intelligent multi-stream feature testing in Ceph scenario

In the Ceph scenario, write amplification was tested with the SSDs' intelligent multi-stream feature enabled and disabled, using the standard JESD219 workload. As the results in Figure 7 show, enabling Union Memory's Intelligent Multi-Stream (IMS) reduces write amplification by more than 20%, significantly improving SSD endurance.
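Write amplification is usually quantified as the write amplification factor (WAF): the data physically written to NAND divided by the data written by the host. The sketch below computes WAF, the fractional reduction, and the implied endurance gain. The gain assumes, as a simplification, that endurance is limited only by the NAND program/erase budget, so the host data a drive can absorb scales as 1 / WAF; under that assumption a 20% WAF reduction corresponds to 25% more host writes. The counter readings are hypothetical, not the Figure 7 results.

```python
def write_amplification(nand_writes_gib: float, host_writes_gib: float) -> float:
    """WAF = data physically written to NAND / data written by the host."""
    if host_writes_gib <= 0:
        raise ValueError("host writes must be positive")
    return nand_writes_gib / host_writes_gib


def waf_reduction(waf_off: float, waf_on: float) -> float:
    """Fractional WAF reduction from enabling a feature such as IMS."""
    return (waf_off - waf_on) / waf_off


def endurance_gain(waf_off: float, waf_on: float) -> float:
    """Extra host data writable for the same NAND wear, assuming endurance
    is bounded only by the program/erase budget (host data ~ 1 / WAF)."""
    return waf_off / waf_on - 1


# Hypothetical counter readings, not the Figure 7 results.
off = write_amplification(nand_writes_gib=3000.0, host_writes_gib=1000.0)
on = write_amplification(nand_writes_gib=2400.0, host_writes_gib=1000.0)
print(f"WAF off={off:.2f} on={on:.2f} "
      f"reduction={waf_reduction(off, on):.0%} gain={endurance_gain(off, on):.0%}")
```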

This verification demonstrates the stable performance of Union Memory's SSDs in the Ceph distributed storage system and shows that they can effectively meet Ceph's storage requirements. Their consistently high performance makes them a strong choice for software-defined storage solutions. In addition, the intelligent multi-stream technology of Union Memory's SSDs reduces SSD write amplification in distributed storage scenarios, improves SSD endurance, and helps users lower their total cost of ownership (TCO). For Ceph distributed storage systems, Union Memory's SSDs provide a storage solution that combines high performance, high reliability, and low cost.